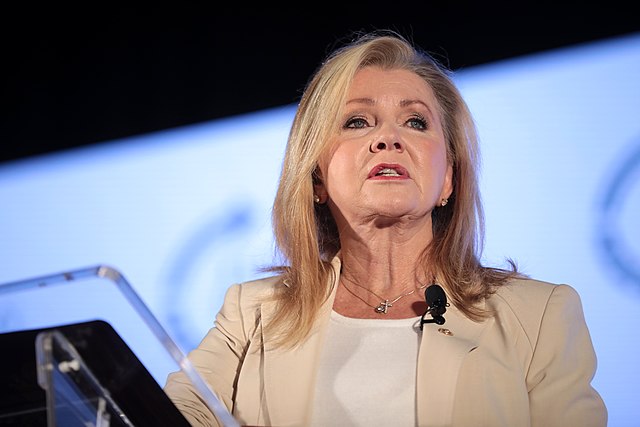

Meta is facing mounting political and legal pressure after a Reuters investigation revealed that internal guidance permitted AI chatbots to engage in romantic or sexual conversations with children. The report, which included screenshots of examples given to staff, sparked immediate bipartisan outrage. Republican senators Josh Hawley and Marsha Blackburn have called for a formal congressional investigation, arguing the case highlights the urgent need for legislative safeguards. Meta acknowledged the authenticity of the internal document but said the examples were errors, and it removed them after being approached for comment.

The controversy lands at a time when the United States still lacks a comprehensive federal framework governing artificial intelligence, leaving platforms to set their own boundaries. Senator Blackburn reiterated her support for the Kids Online Safety Act (KOSA), legislation aimed at imposing a legal “duty of care” on online platforms when dealing with minors. Passed by the Senate last year but stalled in the House, KOSA would oblige companies to design systems that proactively protect children from harmful content and interactions.

Democrats joined the call for greater accountability. Senator Ron Wyden suggested that AI chatbots should not enjoy the broad immunity afforded under Section 230 of the Communications Decency Act, which shields platforms from liability for user-generated content. Senator Peter Welch stressed the urgency of introducing AI-specific safeguards, particularly when systems are capable of imitating human conversation in ways that could be exploited.

From a legal standpoint, the incident underscores the limitations of existing internet liability laws when applied to generative AI. Unlike static user content, AI output is dynamic, personalised, and generated in real time, which blurs the lines of responsibility between the user and the platform. The Meta case may serve as a catalyst for closing this regulatory gap, with lawmakers looking to extend existing protections for children into the AI era.

As legislative momentum builds, Meta faces a challenging period of scrutiny not only over its AI content policies but also over its internal compliance processes. With UK and EU regulators already exploring AI governance and ethical frameworks, the US could soon follow suit, ushering in a more formalised regime of transparency and accountability for AI deployment, especially where the safety of minors is concerned.